🤓 Hey folks! I am Hengyuan Zhang (张恒源 in Chinese), a first-year PhD student at the University of Hong Kong. Before going to college, I grew up in Xiamen, a beautiful coastal city in China.

📚 My research interests revolve around the application of Natural Language Processing (NLP) and Data Mining in specialized domains such as Multilingualism, Education, and Cognitive Science. I aim to approach these studies in an interpretable manner, seeking deeper insights into complex phenomena.

🧐 I am also particularly intrigued by the decision-making mechanisms integrated within models, eager to unravel their inner workings and enhance transparency. All in all, I aim to improve the speciality and interpretability of models, so as to make them more powerful and trustworthy in real-world applications.

📮 I am keen on exploring opportunities for collaboration in research or projects 😊. Please do not hesitate to contact me at your convenience!

📝 Publications

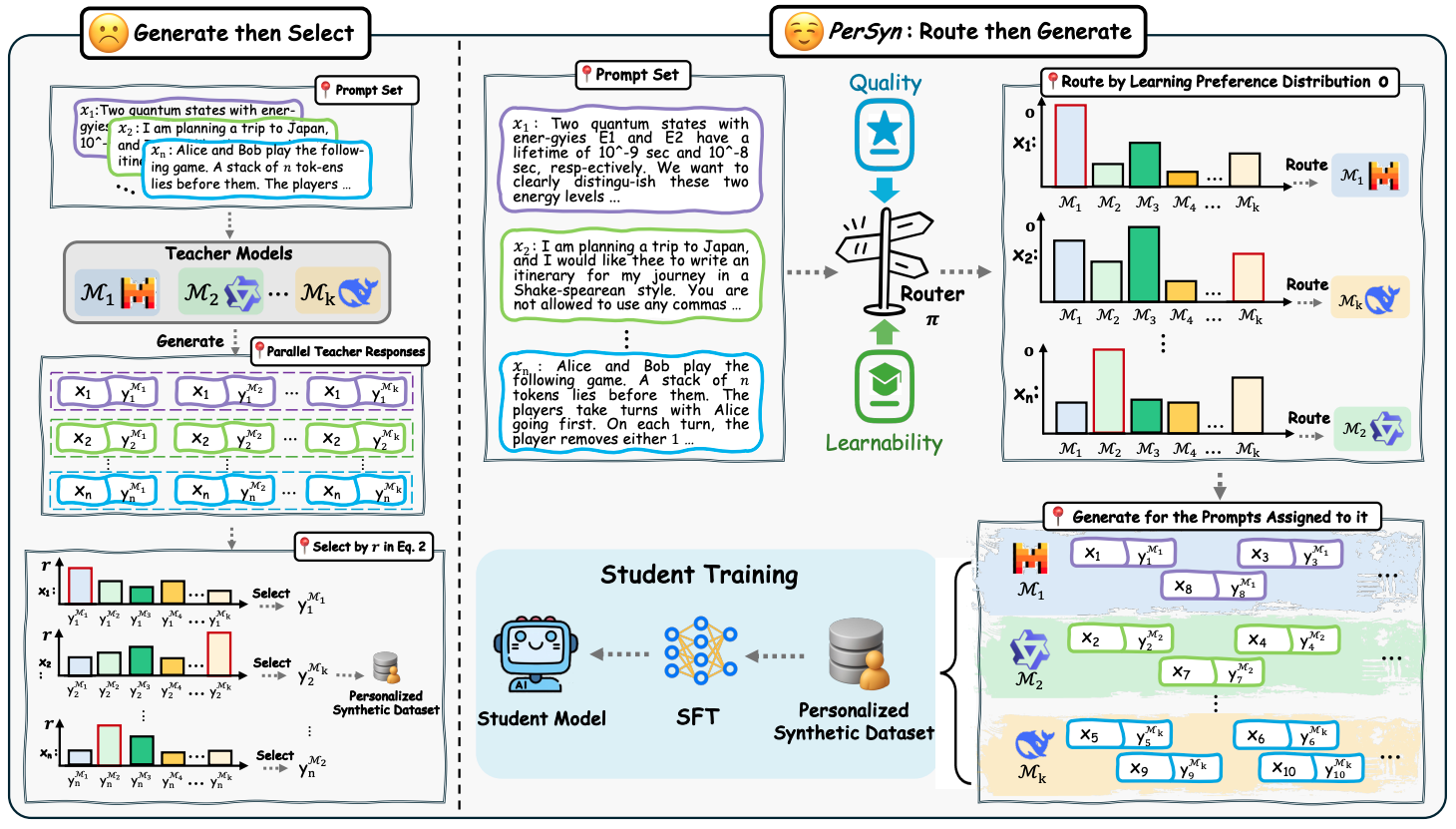

Find Your Optimal Teacher: Personalized Data Synthesis via Router-Guided Multi-Teacher Distillation

Hengyuan Zhang, Shiping Yang, Xiao Liang, et al., Chaofan Tao, Jing Xiong, Hayden Kwok-Hay So, Ruobing Xie, Angel X. Chang, Ngai Wong

| [Paper] | Natural Language Processing, Data Synthesis | Conference Preprint |

- This paper proposes PerSyn, a novel data synthesis strategy that operates under a new “Route then Generate” paradigm to create data tailored to each student model, enabling it to learn more effectively.

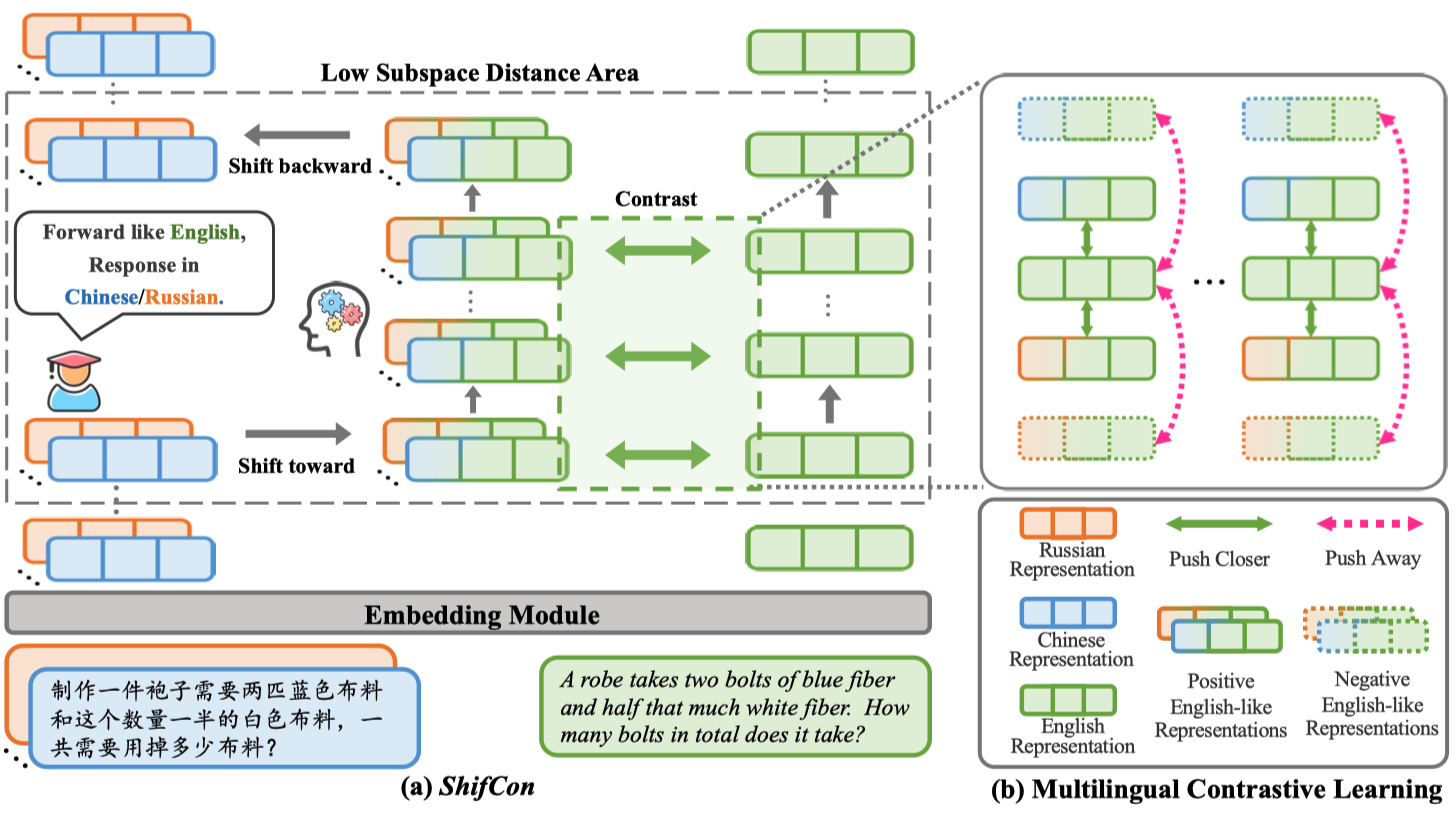

ShifCon: Enhancing Non-Dominant Language Capabilities with a Shift-based Contrastive Framework

Hengyuan Zhang, Chenming Shang, Sizhe Wang, Dongdong Zhang, Feng Yao, Renliang Sun, Yiyao Yu, Yujiu Yang, Furu Wei

| [Paper] | [Code] | Natural Language Processing, Multilingual, Interpretability in Parameter | CCF-A Conference |

- This paper aims to enhance the performance of non-dominant languages by projecting their representations into the dominant language space. We pinpoint the optimal layer area for shifting representations via a subspace distance metric. (OpenReview Score: [4, 4, 4.5])

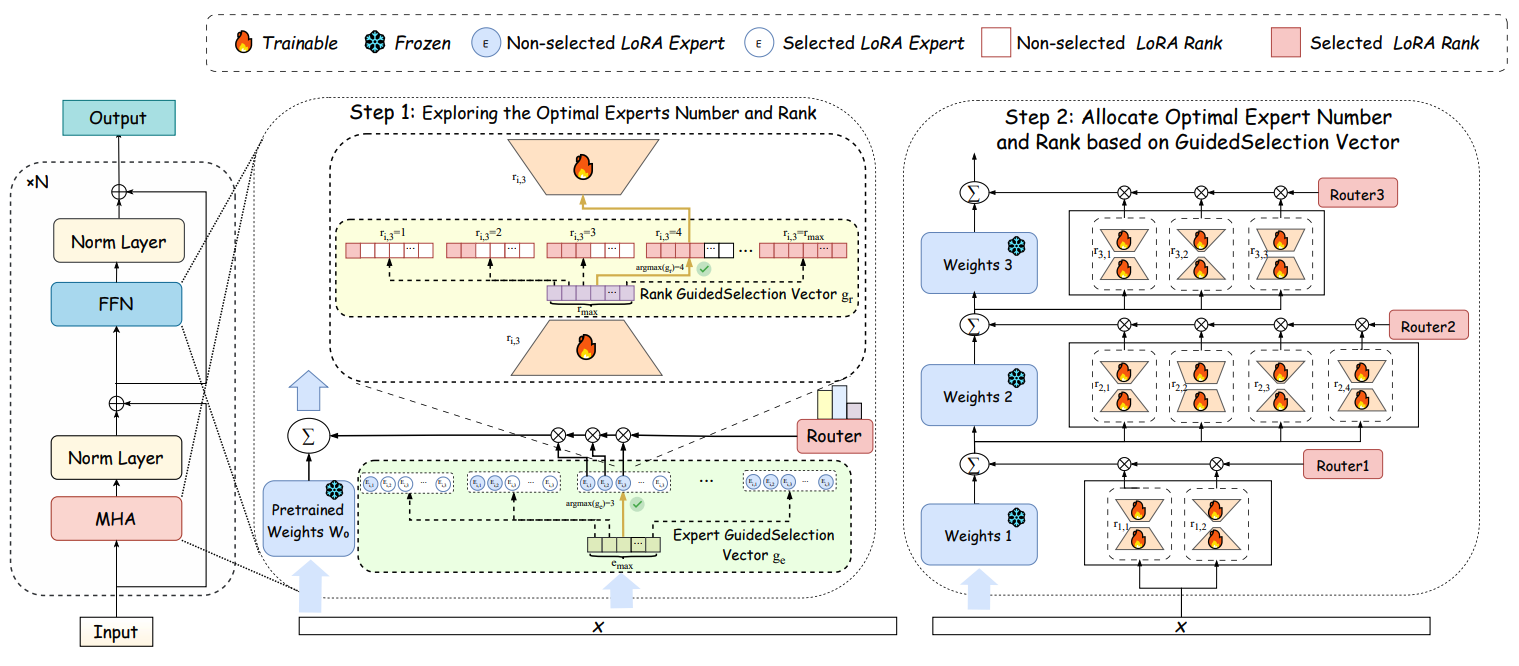

GuiLoMo: Allocating Expert Number and Rank for LoRA-MoE via Bilevel Optimization with GuidedSelection Vectors

Hengyuan Zhang, Xinrong Chen, Xiao Liang, Ziyue Li, et al., Ngai Wong

| [Paper] | [Code] | Natural Language Processing, Fine-tuning Technique, Interpretability in Parameter | CCF-B Conference |

- This paper introduces GuiLoMo, a fine-grained strategy that jointly allocates the optimal layer-wise expert numbers and ranks in LoRA-MoE through bilevel optimization with GuidedSelection vectors.

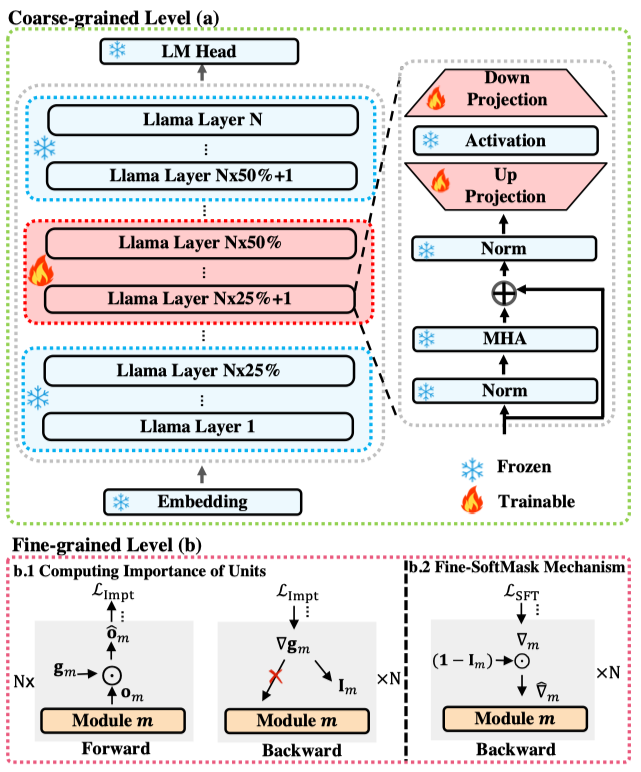

Balancing Speciality and Versatility: A Coarse to Fine Framework for Mitigating Catastrophic Forgetting in Large Language Models

Hengyuan Zhang, Yanru Wu, Dawei Li, Zacc Yang, Rui Zhao, Yong Jiang, Fei Tan

| [Paper] | [Code] | Natural Language Processing, Fine-tuning Technique, Interpretability in Parameter | CCF-A Conference |

- This paper introduces a Coarse-to-Fine Fine-tuning framework (CoFiTune) that strikes a delicate balance between speciality and versatility. It pinpoints and updates specific modules that are crucial for speciality, while keeping other parameters frozen.

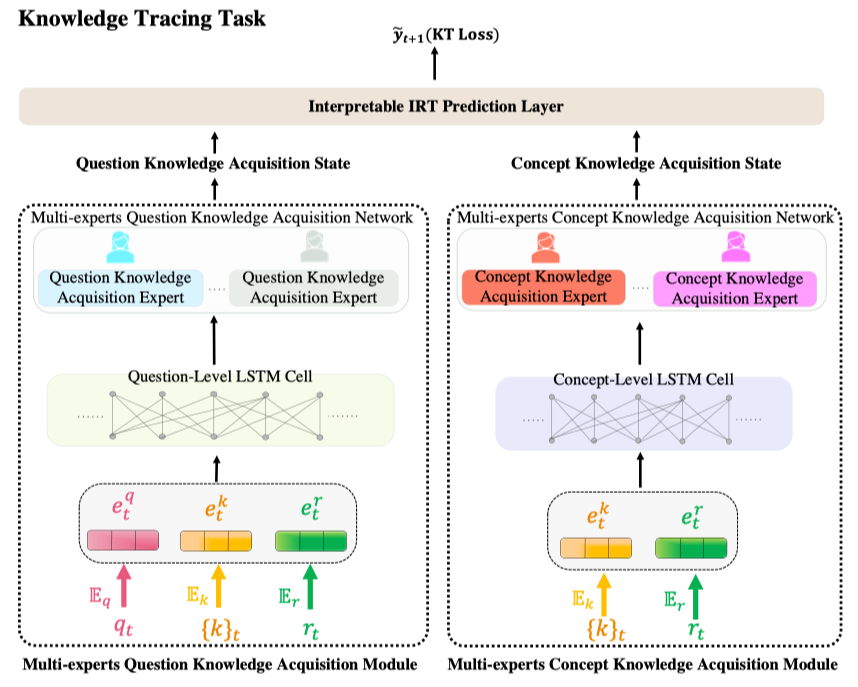

A Question-centric Multi-experts Contrastive Learning Framework for Improving the Accuracy and Interpretability of Deep Sequential Knowledge Tracing Models

Hengyuan Zhang, Zitao Liu, Chenming Shang, Dawei Li, Yong Jiang

| [Paper] | [Code] | Data Mining, Education Recommendation, Interpretability in Prediction | JCR Q1 Journal |

- This paper proposes the Q-MCKT framework, which uses an item response theory (IRT)-based prediction layer to produce interpretable predictions by jointly modeling knowledge acquisition and question difficulty.

- NeurIPS 2025

SwS: Self-aware Weakness-driven Problem Synthesis in Reinforcement Learning for LLM Reasoning

Xiao Liang, Zhong-Zhi Li, Yeyun Gong, Yang Wang, Hengyuan Zhang, Yelong Shen, Ying Nian Wu, Weizhu Chen

[Paper] | Natural Language Processing, Data Synthesis | CCF-A Conference

- ACL 2025

Chain-of-Reasoning: Towards Unified Mathematical Reasoning in Large Language Models via a Multi-Paradigm Perspective

Yiyao Yu, Yuxiang Zhang, Dongdong Zhang, Xiao Liang, Hengyuan Zhang, et al., Yujiu Yang, Furu Wei

[Paper] | Natural Language Processing, Fine-tuning Technique | CCF-A Conference

- EMNLP 2025

TreeReview: A Dynamic Tree of Questions Framework for Deep and Efficient LLM-based Scientific Peer Review

Yuan Chang, Ziyue Li, Hengyuan Zhang, Yuanbo Kong, Yanru Wu, Zhijiang Guo, Ngai Wong

[Paper] | Natural Language Processing | CCF-B Conference

- NAACL 2025

BPO: Towards Balanced Preference Optimization between Knowledge Breadth and Depth in Alignment

Sizhe Wang, Yongqi Tong, Hengyuan Zhang, Dawei Li, Xin Zhang, Tianlong Chen

[Paper] | Natural Language Processing, Fine-tuning Technique | CCF-B Conference

- Preprint 2025

Quantifying the Robustness of Retrieval-Augmented Language Models Against Spurious Features in Grounding Data

Shiping Yang, Jie Wu, Wenbiao Ding, et al., Hengyuan Zhang, Dongmei Zhang

[Paper] | Natural Language Processing, Phenomenon Analysis | Conference Preprint

- Preprint 2024

Improving Low-Resource Knowledge Tracing Tasks by Supervised Pre-training and Importance Mechanism Fine-tuning

Hengyuan Zhang, Zitao Liu, Shuyan Huang, Chenming Shang, Bojun Zhan, Yong Jiang

[Paper] | [Code] | Data Mining, Education Recommendation | Journal Preprint

- Preprint 2024

Compositional Generalization Through Neuroscience-inspired Geometric Constraints on Representation Structure

Chenming Shang, Shiji Zhou, Hengyuan Zhang, Xinchen Zhang, Lei Ke, Yuwang Wang, Yujiu Yang

[Paper] | Computer Vision, Cognitive Science, Interpretability in Representation | Conference Preprint

- CogSci 2024

Understanding Multimodal Deep Neural Networks: A Concept Selection View

Chenming Shang, Hengyuan Zhang, Hao Wen, Yujiu Yang

[Paper] | Computer Vision, Cognitive Science, Interpretability in Prediction | CCF-B Conference

- CVPR 2024

Incremental Residual Concept Bottleneck Model

Chenming Shang, Shiji Zhou, Hengyuan Zhang, Yujiu Yang, Xinzhe Ni, Yuwang Wang

[Paper] | [Code] | Computer Vision, Cognitive Science, Interpretability in Prediction | CCF-A Conference

- EMNLP 2023

Multi-level Contrastive Learning for Script-based Character Understanding

Dawei Li, Hengyuan Zhang, Yanran Li, Shiping Yang

[Paper] | [Code] | Natural Language Processing, Cognitive Science | CCF-B Conference

- ACL 2023 BEA

Assisting Language Learners: Automated Trans-Lingual Definition Generation via Contrastive Prompt Learning

Hengyuan Zhang, Dawei Li, Yanran Li, Chenming Shang, Chufan Shi, Yong Jiang

[Paper] | [Code] | Natural Language Processing, Education, Interpretability in Representation | CCF-A Conference

- AACL 2022 (Oral)

Fine-grained Contrastive Learning for Definition Generation

Hengyuan Zhang, Dawei Li, Shiping Yang, Yanran Li

[Paper] | [Code] | Natural Language Processing, Education, Interpretability in Representation | Conference

💻 Internships

Baidu, Search Strategy Lab, Beijing

- Mar. 2021 - Jul. 2021, Engineering Intern

Xiaomi, AI Lab, Beijing

- Mar. 2022 - Sept. 2022, Research Intern

Tencent, AI Lab, Shenzhen

- Mar. 2023 - Jul. 2023, Research Intern

SenseTime, Research, Shenzhen

- Aug. 2023 - Mar. 2024, Research Intern

Microsoft Research Asia, NLC Group, Beijing

- Mar. 2024 - Dec. 2024, Research Intern

- I received the “Microsoft Stars of Tomorrow” Award during this internship.

🏅 Selected Honors and Awards

👉 Tsinghua University Comprehensive First-Class Scholarship (Top 3%, RMB ¥ 10,000) | 2024

👉 Tsinghua University General Excellence Scholarship (Top 5%, RMB ¥ 4,000) | 2023

👉 National Scholarship (Top 1%, 3 Times, RMB ¥ 24,000) | 2019, 2020, 2021

👉 Outstanding Graduate Student of Beijing (Top 3%) | 2022

👉 Excellent League Member of Beijing (Top 3%) | 2021

👉 Merit Student of Beijing (Top 3%) | 2021

👉 Meritorious Winner of the Interdisciplinary Contest in Modeling (Top 5%) | 2021

👉 Computer Design Competition National Second Prize (Top 5%) | 2020

👉 CUMCM-Beijing Area First Prize (Top 5%) | 2020

👉 Xiaomi Third Hacker Marathon Excellence (Top 7%, RMB ¥ 3,000) | 2022

📌 Miscellaneous

- I once led the Academic Department of the SIGS Student Union at Tsinghua University, where I organized academic activities such as forums and experience-sharing sessions. I am also a member of the Beijing Xiamen ECC (北京厦门企业商会) and actively take part in its sharing activities [Link].

- I have also taken part in social activities, including rural revitalization work [Photo], representing Tsinghua at a “Global Warming” forum in Switzerland [Photo], and helping international students with Chinese, computer skills, and math [Photo].

- I have a strong desire to share. In my spare time, I like writing blogs and sharing my experiences on [Rednote], [WeChat Official Account], and [Bilibili] (阿源的NLP碎碎念). Selected blog posts:

- Interpreting Arithmetic Calculation Modules within LLMs

- Interpreting Security Modules within Large Language Models

- Do Llamas Work in English?

- Interpreting Linguistic Regions within LLMs

- Prevent Catastrophic Forgetting via SoftMask Mechanism

- The Key Components in Transformer

- The Evaluation of Instruction Following

- Skill Localization of Large Language Models

- iMAge-guided Text GeneratIon with CLIP

- I used to be a guitarist 🎸 in a band in high school. I also love playing badminton 🏸, table tennis 🏓, and soccer ⚽️. During holidays, I seize every opportunity to travel around the world ⛳️.